What AWS services does Tech 42 commonly use?

Summary

Tech 42 works across the full AWS AI/ML stack, prioritizing serverless and high-performance computing to balance cost with scale. Our core toolkit revolves around Amazon Bedrock for generative AI, SageMaker for custom model training, and ECS/EKS for containerized deployment. Beyond core infrastructure, we specialize in purpose-built AI services like Textract and Bedrock Data Automation (BDA) for document intelligence and AgentCore for agentic workflows. While we are an AWS Advanced Tier Partner, we remain technology-agnostic, integrating open-source tools (like LangGraph) where they offer superior flexibility.

Detailed AWS Technology Breakdown

We categorize tools into five pillars: AI/ML services, compute, storage and databases, networking and security, and DevOps and frameworks. We select technologies based on the goals of each project and customer. In some cases we will recommend against certain approaches to maximize long-term benefits and efficiencies.

Our work spans multiple cloud providers, industries, and projects, which gives us both deep expertise and broad exposure to tools and services. This lets us advise on tool selection and quickly evaluate new tools and options.

AI/ML Services

The intelligence behind AI/ML workloads. We use these for model hosting, inference, reasoning, and intelligent processes.

- Amazon Bedrock: Accessing high-performing foundation models via API.

- Amazon SageMaker: Full lifecycle ML development and custom model training.

- Amazon Nova: Foundation models built for performance, speed, and cost-efficiency.

- Textract & BDA: Extracting data from documents for Intelligent Document Processing (IDP).

- Knowledge Bases: Enabling RAG (Retrieval-Augmented Generation) architectures.

- Open Source LLMs: Deploying models via Hugging Face and custom containers.
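
As an illustration of what "foundation models via API" can look like in practice, here is a minimal Python sketch that calls a model through the Bedrock Runtime Converse API using boto3. The model ID, region, and inference settings are assumptions chosen for the example, not a description of any specific Tech 42 project. Running `ask_model` requires boto3, AWS credentials, and model access enabled in the account.

```python
def build_converse_request(prompt, model_id="amazon.nova-lite-v1:0", max_tokens=512):
    """Build keyword arguments for the Bedrock Runtime Converse API.

    The default model ID is an assumption for illustration; some regions
    require an inference profile ID instead.
    """
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": 0.2},
    }


def ask_model(prompt, region="us-east-1"):
    """Send the prompt to Bedrock and return the first text block of the reply."""
    # Imported lazily so the request builder can be used without the AWS SDK.
    import boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_request(prompt))
    return response["output"]["message"]["content"][0]["text"]
```

Keeping the request builder separate from the network call makes it easy to unit test prompts and settings without touching AWS.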

Compute

The processing power behind the AI models. We optimize these for latency and cost-efficiency.

- AWS Lambda: Serverless compute for event-driven AI agents.

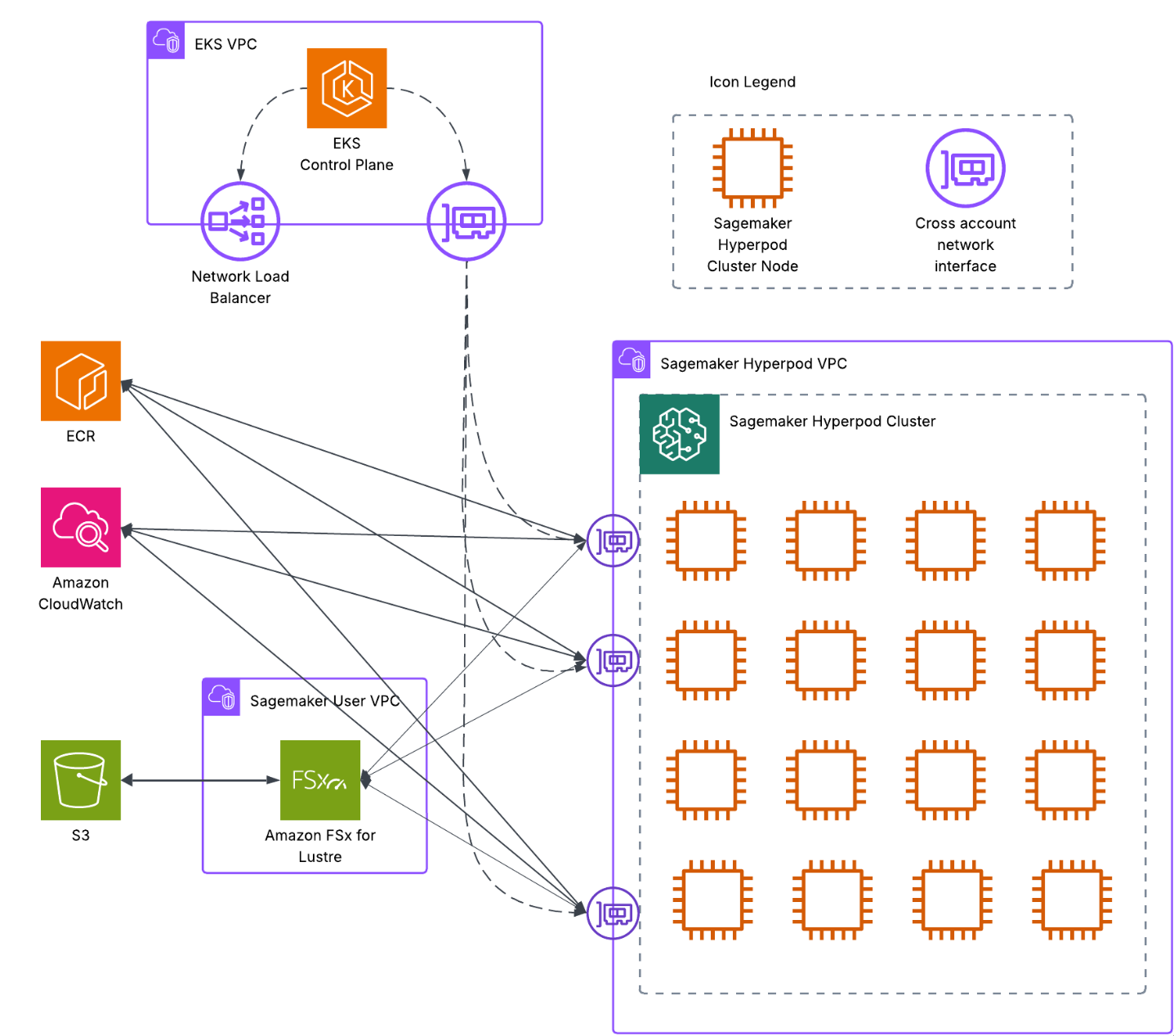

- ECS & EKS: Container orchestration for scalable microservices.

- AWS Batch & ParallelCluster: Managing heavy, High-Performance Computing (HPC) jobs.

- SageMaker HyperPod: Resilient infrastructure for large-scale model training.

- EC2: Right-sized virtual servers (using GPU instances like P4/P5 when necessary).
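
To make the event-driven, serverless pattern more concrete, the following is a minimal sketch of a Python Lambda handler that reacts to S3 upload notifications. The downstream step (here just a log line) and the returned summary shape are hypothetical; a real handler would hand each object to a document pipeline or agent.

```python
from urllib.parse import unquote_plus


def extract_s3_objects(event):
    """Return (bucket, key) pairs from an S3 event notification payload."""
    objects = []
    for record in event.get("Records", []):
        s3 = record.get("s3")
        if not s3:
            continue  # skip records that did not come from S3
        bucket = s3["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications.
        key = unquote_plus(s3["object"]["key"])
        objects.append((bucket, key))
    return objects


def handler(event, context):
    """Lambda entry point: log each uploaded object and report a count."""
    objects = extract_s3_objects(event)
    for bucket, key in objects:
        # Placeholder for the real work, e.g. starting document processing.
        print(f"received s3://{bucket}/{key}")
    return {"processed": len(objects)}
```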

Storage & Databases

The memory that provides the context AI needs to succeed. We ensure your data is stored securely and can be retrieved quickly.

- Amazon OpenSearch Service: Vector search for RAG applications.

- S3 & S3 Vectors: Massive scalability for data lakes and embedding storage.

- Amazon Aurora & RDS: High-performance relational databases.

- FSx for Lustre: High-speed storage for machine learning training data.
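
As a rough illustration of vector search for RAG, the sketch below builds an OpenSearch k-NN query body in Python. The field names `embedding` and `text` are hypothetical and depend on how the index was mapped; the query vector would normally come from an embedding model.

```python
def build_knn_query(query_vector, k=5, vector_field="embedding", text_field="text"):
    """Build an OpenSearch k-NN search body for the k nearest chunks.

    vector_field and text_field are assumed index field names.
    """
    if not query_vector:
        raise ValueError("query_vector must contain at least one dimension")
    return {
        "size": k,
        "_source": [text_field],  # return only the chunk text, not the vectors
        "query": {
            "knn": {
                vector_field: {"vector": list(query_vector), "k": k},
            }
        },
    }
```

The resulting dictionary can be passed as the request body of a search call against a k-NN enabled index; the returned text chunks are then added to the model prompt as context.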

Networking & Security

The pipes and rules that enable systems to work and scale. We ensure your AI is secure, private, compliant, and scalable.

- VPC & PrivateLink: Isolating your AI environment from the public internet.

- API Gateway & ALB: Managing traffic to your models securely.

- IAM & Secrets Manager: Granular permission controls to protect sensitive data.

- EFA (Elastic Fabric Adapter): Accelerating communication between instances for HPC.
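
To show what granular permission controls can mean for an AI workload, here is a minimal sketch that generates a least-privilege IAM policy document allowing a role to invoke only an approved list of Bedrock foundation models. The region, model ID, and statement ID are assumptions for the example; real policies often add conditions and further actions.

```python
import json


def build_invoke_policy(region, model_ids):
    """Return an IAM policy document that allows invoking only the listed models."""
    resources = [
        f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
        for model_id in model_ids
    ]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeApprovedModelsOnly",  # hypothetical statement ID
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel"],
                "Resource": resources,
            }
        ],
    }


if __name__ == "__main__":
    policy = build_invoke_policy("us-east-1", ["amazon.nova-lite-v1:0"])
    print(json.dumps(policy, indent=2))
```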

DevOps & Frameworks

The foundation that enables systems to function with transparency. We use these tools to build, deploy, and monitor.

- Infrastructure as Code: Terraform and AWS CloudFormation for scalable, repeatable deployments.

- Agentic Frameworks: LangGraph and Langfuse for building and monitoring complex AI agents.

- Amazon CloudWatch: Monitoring system health and logging.

- AWS Systems Manager (SSM): Managing configuration and operational insights.

How are these deployed?

Each project carries unique requirements. As we approach a project, we prioritize goals and outputs over a specific technical approach. Here’s an example of a technical architecture we defined for Osmosis (Gulp.ai). (Read the full case study here.)